
Recently, there’s been a wave of AI announcements around “memory” and context lengths.

- Meta released its Llama 4 models, which support up to a 1-million-token context window for the Maverick model and a staggering 10-million-token context window for the Scout model.

- OpenAI announced that ChatGPT will now remember things from previous chats, making the application more useful.

- At GTC, NVIDIA announced the Dynamo library for inference routing and KV cache management.

In this blog, I review what these announcements say and what they mean for systems architects designing an AI factory.

Long contexts represent “the good”

When most of us interact with a large language model (LLM), we write a query and get a response. The input and output together must fit within the model’s “context window,” the maximum number of tokens it can work with in one query. In the chat use case, the inputs and outputs are normally in the 100 to 1,000 token range.

As hardware becomes more capable and as models are developed to support larger context windows, we’re finding use cases where this context window is important.

The one I interact with daily is my coding assistant. When I need code generation, I want the model to understand my existing codebase. I can do this by adding existing code to the context of a generation request. By using more of my code as context, the coding assistant can work at a higher level and make larger architectural changes to my codebase.

Essentially, a longer context window leads to a smarter AI coding assistant.

Other use cases include accessing emails, SharePoint sites, medical records, legal proceedings and more. It’s easy to see why having more data available leads to better output.

What are prefill and the KV cache?

Now that we know why we want larger context windows, we need to cover a concept that’s foundational to modern LLMs — the key-value (KV) cache.

All the major LLMs in use today use the Transformer architecture and each inference query follows the same steps:

embedding -> prefill -> decoding -> post-processing

The embedding phase consists of turning a text query into the vector representations that an AI model can operate on.

The prefill phase consists of running the query (and any provided context, like supporting text or lines of code) through the model, which processes every token and generates internal representations. Among these representations are sets of vectors called the key (K) and value (V) vectors.

The decoding phase is where the fun happens. It takes the data from the prefill phase and starts generating new tokens (words or pieces of words) in response to the query. The K and V vectors from the prefill phase could be recalculated for every newly generated token, but caching and reusing them avoids that repeated work. During decoding, we create K and V vectors for each new token while using the previous vectors to calculate it.

Finally, the post-processing phase takes the tokens generated during decoding and converts them back to human-readable text.
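To make the prefill and decode split concrete, here is a minimal sketch of a single attention head in plain Python. Everything in it is an illustrative assumption rather than how any production model is built: the vector width `D`, the random matrices `WQ`, `WK` and `WV` standing in for trained weights, and the helper names. It shows prefill computing K and V once for the prompt, and a decode step that adds K and V only for the new token.

```python
import math
import random

D = 4  # toy vector width (assumption; real models use thousands of dimensions)
rng = random.Random(0)
# Hypothetical random projection matrices standing in for trained weights.
WQ, WK, WV = ([[rng.uniform(-1, 1) for _ in range(D)] for _ in range(D)] for _ in range(3))

def matvec(w, x):
    """Multiply a D x D matrix by a length-D vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def attend(q, keys, values):
    """Softmax-weighted average of the values, scored by query-key dot products."""
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(D) for k in keys]
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(D)]

def prefill(prompt):
    """Compute K and V once for every prompt token and keep them as the KV cache."""
    return {"k": [matvec(WK, x) for x in prompt], "v": [matvec(WV, x) for x in prompt]}

def decode_cached(cache, x):
    """Add K and V for the new token only, then attend over the whole cache."""
    cache["k"].append(matvec(WK, x))
    cache["v"].append(matvec(WV, x))
    return attend(matvec(WQ, x), cache["k"], cache["v"])

def decode_uncached(history, x):
    """Recompute K and V for every earlier token before attending."""
    seq = history + [x]
    return attend(matvec(WQ, x), [matvec(WK, t) for t in seq], [matvec(WV, t) for t in seq])

prompt = [[rng.uniform(-1, 1) for _ in range(D)] for _ in range(6)]
new_token = [rng.uniform(-1, 1) for _ in range(D)]
cache = prefill(prompt)
cached_out = decode_cached(cache, new_token)
uncached_out = decode_uncached(prompt, new_token)
```

Both decode paths produce the same output vector. The cached path does two projections for the new token, while the uncached path redoes two projections for every token in the history, which is the work that grows with context length.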

An important insight from this breakdown is that an LLM cannot start generating new tokens until the prefill phase is complete. This constraint means that, if prefill takes significant time, then the user’s experience is directly affected.

Prefill takes memory and time

My colleague, Katya Giannios, who has a doctorate in Applied Mathematics, has been modeling system architectures for inference so we can estimate prefill times for some scenarios.

Disclaimer: These are estimates; we’re still running tests in our lab to better understand the system.

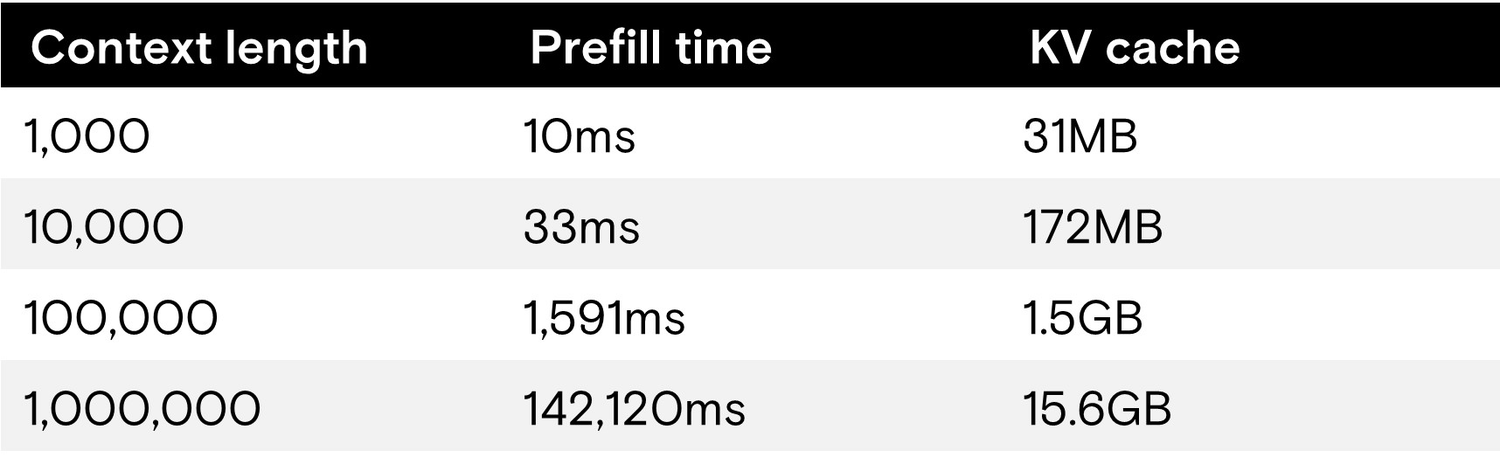

The following table looks at the inference performance of:

- Meta’s Llama 4 Maverick model
  - 400 billion parameters
  - FP8 weights
  - Maximum context window of 1,000,000 tokens

- Running on an NVIDIA DGX B200 server
  - 8x NVIDIA B200 GPUs
  - 1.4TB of HBM3E

- Single user

- 1,000 token output

We’re using a single user to keep things simple, but multiple concurrent users would increase the load and KV cache size considerably.

We see that small contexts are handled easily: prefill takes under a second for contexts of 10,000 tokens and somewhat beyond. But at the maximum context length, prefill takes over 2 minutes, so the user waits that long for the first token before the LLM can start generating any output.

If we multiply out to 10 users who each have a context of 250,000 tokens, the prefill time is over 30 seconds, and the total KV cache size is 39GB.
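The sizing arithmetic can be sketched as a short calculation. The per-token rate here is not a published specification; it is inferred from the 39GB estimate for 2.5 million cached tokens, and the sketch assumes the cache grows linearly with tokens. The HBM budget also assumes that the FP8 weights take about 400GB (400 billion parameters at one byte each) and ignores activations and other overhead.

```python
# Per-token KV cache footprint implied by the article's estimate of
# 39 GB for 10 users x 250,000 tokens (an inferred figure, not a spec).
GB_PER_MILLION_TOKENS = 39 / 2.5

def kv_cache_gb(users, tokens_per_user, gb_per_million=GB_PER_MILLION_TOKENS):
    """Total KV cache size in GB, assuming it grows linearly with cached tokens."""
    return users * tokens_per_user / 1_000_000 * gb_per_million

single_user_max = kv_cache_gb(1, 1_000_000)  # one user at the full 1M-token window
ten_users = kv_cache_gb(10, 250_000)         # the 10-user scenario described above

# Rough HBM budget (assumptions: 1,400 GB of HBM, ~400 GB of FP8 weights,
# nothing else resident). How many 250,000-token caches fit alongside the model?
HBM_GB = 1400
WEIGHTS_GB = 400
sessions_that_fit = int((HBM_GB - WEIGHTS_GB) // kv_cache_gb(1, 250_000))
```

Under these assumptions, a few hundred retained 250,000-token sessions would fill the remaining HBM, which is why where the cache lives matters as much as how fast it is computed.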

This is the ugly side of large contexts: computing the KV values for a long context can add anywhere from seconds to minutes of waiting before the first token appears. Unless we can improve prefill time, long contexts will not be appropriate for interactive use cases.

Why should we reuse KV caches?

If we go back to my original example for using a long context, AI coding assistants, we expect that the context for successive queries will have significant reuse. As the coding assistant generates methods on a class, the base class will be accessed for every query.

Instead of generating the KV cache for every query, we should generate it once and then reuse it for successive queries. The problem with this approach is the size of the KV cache. Even with the optimizations in the most recent LLMs, keeping KV caches around for many long-context sessions will eventually consume all the memory in the AI system.
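A minimal sketch of the reuse idea: because each token’s K and V vectors depend on the tokens before it, a new request can reuse cached vectors only for the leading run of tokens it shares with an earlier request. The function names and token IDs below are hypothetical, and real systems typically match in fixed-size blocks rather than token by token.

```python
def shared_prefix_len(cached_tokens, request_tokens):
    """Count the leading tokens that the request shares with the cached sequence."""
    n = 0
    for a, b in zip(cached_tokens, request_tokens):
        if a != b:
            break
        n += 1
    return n

def tokens_to_prefill(cached_tokens, request_tokens):
    """Only the tokens after the shared prefix need fresh K and V vectors."""
    return len(request_tokens) - shared_prefix_len(cached_tokens, request_tokens)

# Hypothetical token IDs: a 1,000-token base class followed by a short instruction.
base_class = list(range(1000))
first_query = base_class + [5001, 5002, 5003]
second_query = base_class + [6001, 6002]

cold = tokens_to_prefill([], first_query)            # nothing cached yet
warm = tokens_to_prefill(first_query, second_query)  # base class already cached
```

In this toy case the first query prefills all 1,003 tokens, while the second prefills only the 2 tokens that follow the shared base class.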

Offloading to the rescue!

The NVIDIA Dynamo library has a KV cache manager that migrates KV caches from GPU memory to other available devices. It also implements KV reuse techniques that turn the KV cache into a KV pool shared between multiple sessions and users. The 1,000,000-token KV cache (~15GB) that we discuss moving out of HBM is just a small fraction of a much larger KV pool.

The first stop is system memory. The DGX example supports 4TB of system memory with an aggregate bandwidth of 1 TB/s to the GPUs. With this much bandwidth, loading the KV cache for 1,000,000 tokens from CPU memory to GPU memory (in the ideal case) would take only 15 ms compared to recomputing it, which would take over 2 minutes, as we mentioned earlier.

This reduction in time is great for the user! After initial computation of the context, reloading from CPU memory is extremely fast and enables the interactive user experience.

CPU system memory still faces the same problem as GPU memory — limited capacity.

The next stop encompasses the local NVMe drives in the DGX server. With eight PCIe Gen5 NVMe drives (like the Micron 9550 high-performance Gen5 drive), the next tier of KV cache offload capacity can range from 30TB to 245TB, with an aggregate bandwidth of 112 GB/s.

While the storage layer is significantly slower than system memory, that 1,000,000-token KV cache will still only take 140 ms to load.
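The load times quoted for both tiers follow from dividing cache size by bandwidth. This sketch uses the article’s round numbers (~15GB cache, 1 TB/s to system memory, 112 GB/s across the NVMe drives) and assumes an ideal transfer with no latency or software overhead; the constant and function names are illustrative.

```python
KV_CACHE_GB = 15    # ~1,000,000-token KV cache, from the article
RECOMPUTE_S = 120   # "over 2 minutes" of prefill, used here as a lower bound

# Aggregate bandwidths quoted in the article, in GB/s.
TIERS = {"system memory": 1000, "local NVMe": 112}

def load_time_ms(size_gb, bandwidth_gb_s):
    """Ideal transfer time in milliseconds, ignoring latency and software overhead."""
    return size_gb / bandwidth_gb_s * 1000

load_times = {tier: load_time_ms(KV_CACHE_GB, bw) for tier, bw in TIERS.items()}
speedups = {tier: RECOMPUTE_S * 1000 / ms for tier, ms in load_times.items()}
```

With a flat 15GB, the NVMe figure comes out just under the 140 ms quoted above; the small gap comes from rounding the cache size. Either tier is hundreds to thousands of times faster than recomputing the cache.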

Using these offload and migration techniques improves the user experience by reducing the time to generate the first token. And it’s also great for total cost of ownership (TCO) since the GPUs spend more of their time generating output instead of redoing work they’ve done before.
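The offload and migration flow can be pictured with a toy tiered store. This is a hypothetical sketch and not the Dynamo API: the class name `TieredKVStore`, the tier names, the capacities and the least-recently-used policy are all assumptions chosen for illustration. Caches enter the fastest tier, older caches are demoted when a tier overflows, and a cache is promoted back to the top when it is requested again.

```python
from collections import OrderedDict

class TieredKVStore:
    """Toy KV pool: each tier is an LRU map of cache key -> size in GB."""

    def __init__(self, tiers):
        # tiers: list of (name, capacity_gb), fastest first (e.g. HBM, DRAM, NVMe)
        self.tiers = [(name, cap, OrderedDict()) for name, cap in tiers]

    def _insert(self, key, size_gb, level):
        if level >= len(self.tiers):
            return  # fell off the slowest tier; a later request must recompute
        _, cap, entries = self.tiers[level]
        if size_gb > cap:
            self._insert(key, size_gb, level + 1)  # too big for this tier
            return
        entries[key] = size_gb
        while sum(entries.values()) > cap:
            # Demote the least recently used cache to the next tier down.
            old_key, old_size = entries.popitem(last=False)
            self._insert(old_key, old_size, level + 1)

    def put(self, key, size_gb):
        """Store a cache in the fastest tier, removing any stale copy first."""
        for _, _, entries in self.tiers:
            entries.pop(key, None)
        self._insert(key, size_gb, 0)

    def get(self, key):
        """Return the tier the cache was found in and promote it, or None on a miss."""
        for name, _, entries in self.tiers:
            if key in entries:
                self.put(key, entries[key])
                return name
        return None

# Hypothetical capacities in GB; three sessions with 15 GB caches each.
store = TieredKVStore([("hbm", 30), ("dram", 60), ("nvme", 1000)])
for session in ("a", "b", "c"):
    store.put(session, 15)
found_in = store.get("a")  # "a" was demoted when "c" arrived
```

A miss (`None`) corresponds to the expensive path of running prefill again; every hit in a lower tier trades that recomputation for a transfer.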

High-performance storage enables long context inference

The generative AI landscape has shifted considerably in the past couple of years. Where storage was previously an afterthought, we see that providing high-performance storage for an AI factory will be critical to enabling intelligent AI with a positive user experience.

At FMS last year and at GTC this spring, Micron showcased the performance of our upcoming PCIe Gen6 NVMe drive. These developments around long contexts and KV cache management show that having the fastest NVMe flash connected to the latest GPUs will be key to successfully deploying AI.